In the previous chapter, we learnt about clusters, i.e. how to bring up a k8s cluster. In this chapter, we are going to learn how to run a simple nginx application on a k8s cluster.

Before we do that, let's find out what a controller is and what workloads are.

- Controller: A controller in Kubernetes is a control loop that watches the state of the cluster and makes changes to move the observed state towards the desired state.

- Workloads: An application running on Kubernetes is considered to be a workload. Whether a workload is a single component or several components working together, it runs inside a set of Pods. A Pod represents a set of running containers in a cluster. We do not need to manage Pods directly, since we can use workload resources that manage sets of Pods for us.
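To make the idea of a Pod concrete, here is a minimal sketch of a bare Pod manifest. The name `nginx-pod` is hypothetical and used only for illustration; in practice we would rarely create Pods directly like this, and the rest of this chapter uses a Deployment instead.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod # hypothetical name, for illustration only
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80 # Port the nginx container listens on
```

A Pod created this way is not recreated if it is deleted, which is one reason to prefer the workload resources described next.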

Kubernetes provides us with several built-in workload resources such as:

- ReplicaSets: A ReplicaSet is one of the Kubernetes controllers that makes sure we have a specified number of pod replicas running.

- Deployments: A Deployment provides declarative updates for Pods and ReplicaSets. We describe a "desired state" in a Deployment, and the Deployment controller changes the actual state to the desired state at a controlled rate. We can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

- StatefulSet: A StatefulSet lets us run one or more related Pods that track state. For example, if our workload records data persistently, we can run a StatefulSet that matches each Pod with a PersistentVolume. Typical candidates are stateful applications such as MySQL, Redis and Cassandra.

- DaemonSet: A DaemonSet ensures that all (or some) nodes run a copy of a Pod. When a node that matches the specification in the DaemonSet is added to the cluster, the control plane sets up a Pod for that DaemonSet on the new node. When nodes are removed from the cluster, those Pods are garbage collected. Deleting a DaemonSet will clean up the Pods it created.

- Job and CronJob: These define tasks that run to completion and then stop. Jobs represent one-off tasks, whereas CronJobs recur according to a defined schedule, e.g. hourly or every 6 hours.
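As an illustration of the last item, a CronJob that runs hourly could be sketched roughly as below. The name `hello-hourly`, the `busybox:1.36` image and the command are assumptions chosen for this example, not something used elsewhere in this chapter.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-hourly # hypothetical name, for illustration only
spec:
  schedule: "0 * * * *" # Standard cron syntax: at minute 0 of every hour
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure # Job Pods must use OnFailure or Never
          containers:
            - name: hello
              image: busybox:1.36 # assumed image, any small image with a shell would do
              command: ["sh", "-c", "date; echo hello from the CronJob"]
```

Each time the schedule fires, the CronJob creates a Job, and that Job runs a Pod to completion.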

Now, let's try to run a sample nginx application on our Kubernetes cluster.

Firstly, let's check if our nodes are ready. For this example, we are running it on our local host using minikube.

kubectl get nodes

If the node is listed with a STATUS of Ready, our minikube cluster is ready and we can now add pods to it.

kubectl get pods

We don't have any pods running right now.

Let's create a file called Deployment.yml in which we add the below code:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment # Name of the deployment
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx # Name of the container
              image: nginx:latest # Pulls the latest image from Docker Hub
              imagePullPolicy: Always # Always pulls the image
              ports:
                - containerPort: 80 # Port the container listens on
                  protocol: TCP

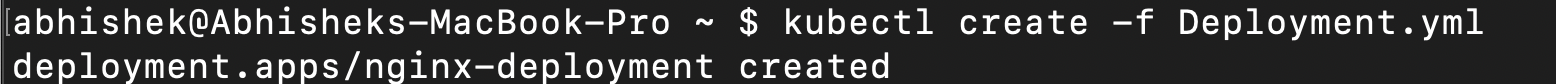

To create the Deployment, which in turn creates the pod:

kubectl create -f Deployment.yml

To check the status of the pods:

kubectl get pods

To check the logs of the pod:

kubectl logs -f nginx-deployment-8d545c96d-nqzgf

where "nginx-deployment-8d545c96d-nqzgf" is the name of the pod (the generated suffix will be different on your cluster) and "logs -f" will follow/tail the logs.

To check the deployments:

kubectl get deployments

Now, the pod is running fine. We can verify this once more by running kubectl get pods.

Next, to access the nginx endpoint, we need to create a "service" for it and expose it on a port.

Create a file called "Service.yml" with the below code:

    kind: Service
    apiVersion: v1
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      selector:
        app: nginx
      ports:
        - port: 80
          protocol: TCP
          targetPort: 80
      type: ClusterIP

To create the service:

kubectl create -f Service.yml

Here, ClusterIP gives us access to the service inside the cluster. The CLUSTER-IP you get when calling kubectl get services is the IP assigned to this service within the cluster internally.

Next, we want to access the nginx homepage via browser.

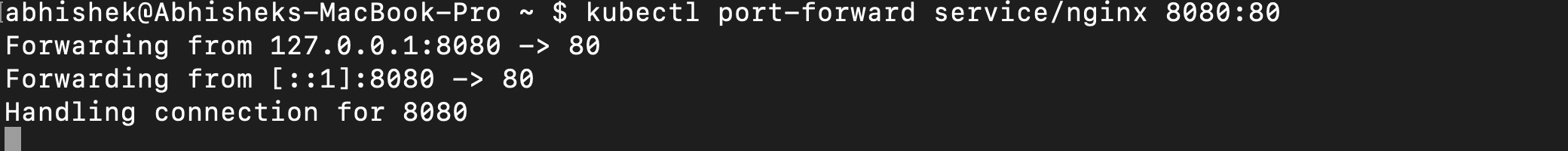

Let's set up port forwarding to access it on local port 8080:

kubectl port-forward service/nginx 8080:80

Now, we go to our browser at http://127.0.0.1:8080 and voila, the nginx welcome page loads.

The above port-forward command is similar to the following Docker command, in that both map local port 8080 to port 80 of nginx:

docker run -p 8080:80 nginx:latest

Suppose we do not want to use port forwarding for nginx. Let's change type: ClusterIP to type: LoadBalancer in Service.yml.
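As a sketch of that change, assuming the Service.yml created earlier in this chapter, the edited manifest might look like the following. Only the `type` field differs; everything else is carried over unchanged.

```yaml
kind: Service
apiVersion: v1
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  selector:
    app: nginx # Still selects the pods created by nginx-deployment
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
  type: LoadBalancer # Was ClusterIP
```

Because the service already exists, one way to update it in place is probably kubectl apply -f Service.yml rather than kubectl create, which would fail on an existing resource.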

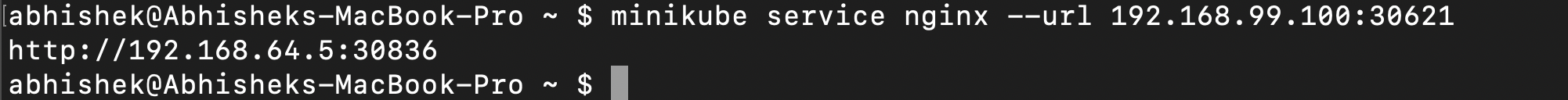

Since our local machine does not have a load balancer, unlike a managed service such as EKS provided by AWS, let's run the following command:

minikube service nginx --url

This prints the address of the service in the form 192.168.99.100:30621. The IP and port will differ from machine to machine.

Now, let's copy the URL provided, in our case http://192.168.64.5:30836/, into the browser and voila, it works.